The Mobile Artificial Intelligence Revolution: Major Players and Future Trends

The mobile Artificial Intelligence (AI) revolution is in full swing and shows no sign of slowing down. Phone manufacturers are racing to reap the benefits of generative AI, which OpenAI first made popular and which has since sparked the interest of virtually every industry.

From the newly launched Apple Intelligence features to Google, Amazon, and Microsoft’s recent advancements in the sector, there’s a lot happening when it comes to AI technology for smartphones. In fact, it is thanks to AI that leading market analysts predict a rebound in smartphone sales starting in 2024, despite widespread concerns about an extended downturn in the mobile sector.

But while there is a lot of excitement in this area, we have a long way to go before we can say that mobile AI technology has matured. There is still no consensus among industry professionals on what defines an AI smartphone. Most experts, however, agree that these devices will have more advanced chips to run AI applications, and that those AI apps will run on the device rather than in the cloud.

For software company owners and mobile app developers, now is the right time to develop a solid understanding of AI technology for smartphones. Gaining early knowledge and expertise can provide a significant first-mover advantage in a highly competitive and rapidly evolving market.

In this article, we will provide you with a brief history of mobile AI development from 2007 to the present day, outline the major players in the industry today, and give our two cents on what we think will happen in the future.

The Introduction of AI in Smartphones: How Everything Started (2007-2012)

2007—when the first iPhone was introduced—is perhaps the year that marked the start of the mobile AI revolution. Smartphones back then primarily focused on internet access and multimedia capabilities, with basic AI features like predictive text and voice recognition. These early advancements, however, paved the way for the many AI developments in the years to come.

Key moments:

In 2011, Apple introduced Siri, the first widely known AI-powered virtual assistant, with the iPhone 4S. Siri relied heavily on cloud processing but laid the groundwork for AI integration in smartphones, with features like predictive text and basic voice recognition. Siri’s success pushed other companies to speed up AI development, which led to better mobile AI features by 2012 such as improved voice recognition, personalized recommendations, and contextual awareness.

In 2012, Google launched the Google Now feature, which provided predictive information based on user data. Like Siri, it primarily used cloud processing. Google Now leveraged vast amounts of data from various Google services, such as search history, emails, and calendar, to deliver relevant updates and recommendations, like traffic conditions before a commute or flight status before a trip.

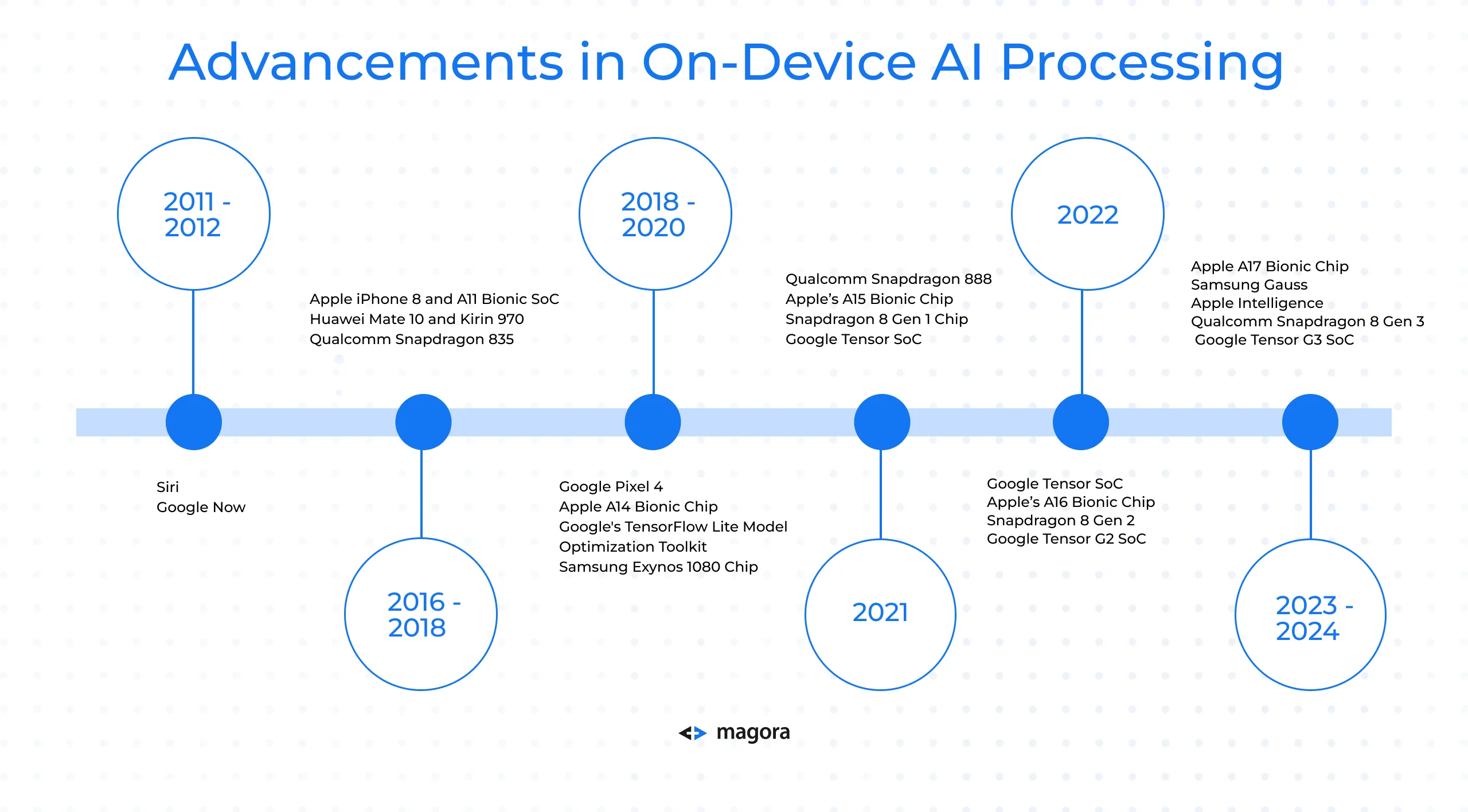

Advancements in On-Device AI Processing (2016-2018)

When we talk about mobile AI’s potential to progress, it is important to discuss the developments surrounding on-device AI processing. This term refers to smartphones’ ability to perform machine learning and AI tasks directly on the phone’s hardware, without the need for external cloud servers. On-device AI processing, also known as Edge AI, is crucial for the speed, security, personalization, and efficiency of the AI technology.

In 2017, Apple introduced the iPhone 8 and iPhone X, both powered by the A11 Bionic System on a Chip (SoC), which included a dedicated Neural Engine. This Neural Engine enabled complex AI-driven tasks to run directly on the device. On the iPhone X, these included Face ID, which uses machine learning to recognize faces securely, and Animoji, which uses real-time facial tracking to create animated emojis.

That same year, Huawei released the Mate 10 with the Kirin 970 processor, which included a dedicated Neural Processing Unit (NPU). This allowed for enhanced AI capabilities for tasks such as image recognition, voice processing, and real-time translation.

Qualcomm launched the Snapdragon 835 mobile platform in 2017, which used AI to handle tasks like voice recognition and image processing, while also improving battery efficiency and overall performance for on-device AI tasks. The Snapdragon 835 was widely used in flagship Android devices, and supported applications in augmented reality (AR) and virtual reality (VR).

Advancements in On-Device AI Processing (2018-2020)

New developments between 2018 and 2020 further improved on-device AI processing, allowing smartphones to handle increasingly complex AI tasks.

In 2019, Google launched the Pixel 4 smartphone, which introduced the Pixel Neural Core, a dedicated AI processor with enhanced on-device AI capabilities for photography and voice recognition. The Pixel Neural Core supported advanced computational photography features, including HDR+ and Night Sight, which allowed for better photo quality even in challenging conditions. Additionally, it enabled more accurate and responsive voice recognition, making interactions with Google Assistant smoother and more intuitive.

In 2020, Apple set a new standard for on-device AI processing with the release of the A14 Bionic chip. This chip featured a 16-core Neural Engine capable of performing 11 trillion operations per second. The A14's processing power supported a variety of machine learning tasks across the device, from enhancing photo and video quality through advanced computational photography to powering AI-driven apps and features quickly and efficiently.
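
To put the 11 trillion operations per second figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the chip sustains its peak rate, which real workloads rarely do, and the model size used is a hypothetical number chosen only for illustration, not a measurement of any real app.

```python
# Peak Neural Engine throughput quoted above for the A14 Bionic.
A14_PEAK_OPS_PER_SECOND = 11e12


def min_inference_time_ms(ops_per_inference: float,
                          peak_ops_per_second: float = A14_PEAK_OPS_PER_SECOND) -> float:
    """Theoretical lower bound on one inference, in milliseconds.

    Assumes the accelerator runs at peak throughput for the whole inference;
    memory traffic and scheduling overhead are ignored.
    """
    if ops_per_inference < 0 or peak_ops_per_second <= 0:
        raise ValueError("operation counts must be non-negative and throughput positive")
    return ops_per_inference / peak_ops_per_second * 1000.0


# Hypothetical model that needs 5.5 billion operations per inference.
print(f"{min_inference_time_ms(5.5e9):.2f} ms per inference (best case)")
```

Even as a best-case bound, a sub-millisecond budget per inference helps explain why tasks such as per-frame photo processing became practical on the phone itself.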

Recent Developments in On-Device AI Processing (2021-Present)

In recent years, on-device AI processing has seen major progress, with leading tech companies using enhanced machine learning capabilities and large language models to deliver more personalized, real-time experiences while maintaining user privacy and efficiency.

In 2021, Qualcomm introduced the Snapdragon 888 with its 6th-generation Qualcomm AI Engine. This new AI engine brought significant improvements in AI performance that allowed for more complex AI tasks to be processed directly on the device. The Snapdragon 888 enabled enhanced features across a variety of applications, including photography, gaming, and augmented reality.

Also in 2021, Google launched its custom Tensor SoC with the release of the Pixel 6 series. The Tensor SoC was designed with a strong focus on AI and machine learning and significantly improved the performance of features like voice commands, real-time translation, and computational photography. It allowed for more personalized and intuitive user experiences by enabling complex AI processing directly on the device.

In 2023, Apple continued to lead in on-device AI innovation with the introduction of the A17 Pro chip. Its faster Neural Engine accelerated on-device features such as Live Text, which allows users to interact with text in images and videos, and improved Siri's responsiveness and accuracy.

That same year, Samsung announced Samsung Gauss, its own large language model (LLM). Gauss comprises three models (Gauss Language, Gauss Code, and Gauss Image) designed to generate text, code, and images. This move positions Samsung Gauss as a competitor to existing AI models like ChatGPT and represents a significant step forward in bringing advanced AI capabilities directly to consumer devices.

In 2024, Apple launched the Apple Intelligence system, an evolution of its AI and machine learning framework that integrates with the latest iOS devices. Apple Intelligence builds on the advancements made with the A17 Pro chip, further enhancing the Neural Engine's capabilities to deliver more powerful and intuitive user experiences. It refines Siri’s contextual understanding, improves real-time translation, offers more personalized suggestions across apps, and brings enhanced image editing capabilities.

With Apple Intelligence, the company has taken a significant step towards creating a more connected and intelligent ecosystem, where AI not only processes tasks efficiently but also anticipates user needs with greater accuracy and speed. Read our recent article to learn more about the innovations that Apple Intelligence brings.

Notable Mobile AI Apps

This on-device AI progress is reflected in the growing number of mobile apps that use this technology to enhance user experience. Let’s take a look at some of the major mobile AI apps:

Google AI:

- Google Assistant: Google Assistant is a widely used virtual assistant available on both Android and iOS devices. Its voice interaction allows users to perform tasks such as setting reminders, sending messages, controlling smart home devices, and conducting searches using natural language. Google Assistant leverages AI and machine learning to understand and respond to user queries more accurately over time.

- TensorFlow Lite: TensorFlow Lite is a lightweight version of Google's popular TensorFlow framework. It was specifically designed to run machine learning models on mobile and embedded devices. With it, developers can deploy AI models on mobile apps, allowing the device to quickly analyze data and make decisions on the spot. This is important for tasks like image recognition, speech processing, and augmented reality.

- Google Cloud AI: Google Cloud AI provides a suite of machine learning and AI services that can be integrated into mobile applications. These services include pre-trained models for natural language processing, vision, and translation, as well as tools for custom model training. Mobile developers can leverage Google Cloud AI to add sophisticated AI capabilities to their apps without the need to build models from scratch.

Microsoft AI:

- Cortana: Cortana is Microsoft's virtual personal productivity assistant. It was available on Android and iOS devices until Microsoft retired the standalone mobile apps in 2021. Cortana offered voice interaction and deep integration with Microsoft services like Outlook and Office 365, which made it a useful tool for managing tasks, scheduling meetings, and accessing information on the go.

- Azure AI Services: Microsoft also offers a range of AI services through its Azure cloud platform that can be integrated into mobile apps. These services include Azure Cognitive Services, which provides APIs for speech, language, vision, and decision-making tasks. Mobile developers can use these tools to build intelligent applications for tasks such as real-time language translation, speech recognition, image analysis, and personalized user recommendations.

Amazon AI:

- Alexa: Alexa, Amazon's voice assistant, is available on mobile devices through the Alexa app. It provides users with voice interaction capabilities, allowing them to control smart home devices, play music, set reminders, and more. Alexa's integration with a wide range of third-party services and devices makes it a central hub for managing smart environments via mobile.

- AWS AI Services: Amazon Web Services (AWS) offers a suite of AI tools, including Amazon Lex, an AI service for building conversational interfaces. These services enable apps to handle natural language processing, sentiment analysis, and chatbot functionality.

IBM Watson:

- Watson Assistant: The IBM watsonx Assistant can be integrated into mobile applications to provide conversational AI capabilities. It is designed to understand user queries in natural language and provide accurate, context-aware responses. This makes it ideal for customer service apps, virtual assistants, and other interactive mobile experiences.

- Watson Studio: While primarily a cloud-based development environment, models built in Watson Studio can be deployed on mobile apps. Watson Studio allows developers to create, train, and optimize AI models, which can then be integrated into mobile applications to provide predictive analytics, decision support, and other functionalities.

Meta AI:

- PyTorch Mobile: PyTorch Mobile is a framework that allows PyTorch models to run efficiently on mobile devices. With it, developers can integrate complex AI features into their mobile applications, such as real-time object detection, image classification, and natural language processing. PyTorch Mobile's user-friendliness, flexibility, and performance make it a popular choice for building AI-powered mobile apps, particularly in the research and development community.

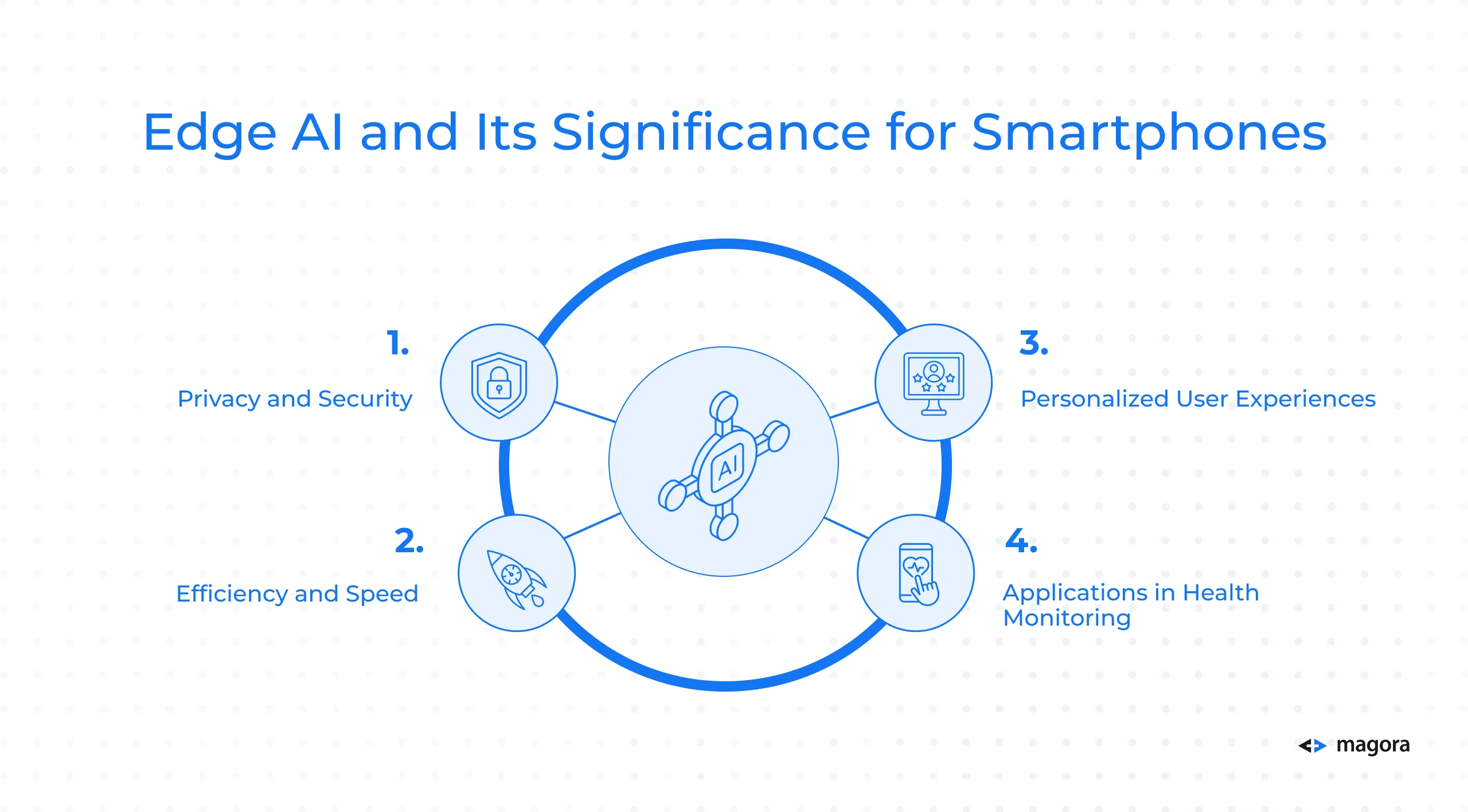

Edge AI and Its Significance for Smartphones

Throughout this article, we’ve often discussed the importance of on-device AI processing as opposed to processing in the cloud. Let’s take a deeper look at what on-device AI means and why it is so crucial for the development of mobile AI technology.

On-device AI, also known as Edge AI, refers to the deployment of artificial intelligence algorithms directly on local devices, such as smartphones, sensors, or Internet of Things (IoT) devices. This allows for real-time data processing and analysis on the device itself, without the need to constantly rely on cloud-based infrastructure.

In essence, Edge AI combines the principles of edge computing and artificial intelligence. Edge computing involves storing and processing data close to the device where it is generated, rather than sending it to a distant data center or cloud. When AI algorithms are applied to this locally stored data, machine learning tasks can be executed directly on the device, often without requiring an internet connection. This setup facilitates rapid data processing, enabling real-time responses that are critical in many applications.

Here are the main advantages of Edge AI for smartphones:

Privacy and Security

One of the primary benefits of Edge AI on smartphones is enhanced privacy. By processing data directly on the device, sensitive information does not need to be transmitted to remote servers, reducing the risk of data breaches or unauthorized access. This is particularly crucial for applications involving personal data, such as health monitoring or financial transactions, where users are highly concerned about the security of their information.

Efficiency and Speed

Edge AI also improves the efficiency and speed of AI tasks. Since data is processed locally, there is no need to send information back and forth to the cloud, which can slow down the process. This allows for real-time processing, which is essential for applications like real-time language translation. For example, a smartphone equipped with Edge AI can instantly translate spoken words into another language.
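
The latency argument can be made concrete with a small sketch. The class and function names below are illustrative, and every timing is a hypothetical placeholder rather than a benchmark. The point is only that a cloud request pays for two network hops on top of compute, while an on-device request pays for compute alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudPath:
    """Hypothetical timing breakdown of one cloud inference request."""
    uplink_ms: float          # sending the input to the server
    server_compute_ms: float  # running the model remotely
    downlink_ms: float        # receiving the result

    def total_ms(self) -> float:
        return self.uplink_ms + self.server_compute_ms + self.downlink_ms


def faster_path(on_device_compute_ms: float, cloud: CloudPath) -> str:
    """Return which path finishes first under the given assumptions."""
    return "on-device" if on_device_compute_ms <= cloud.total_ms() else "cloud"


# Assumed numbers: a fast server, but a 60 ms network hop in each direction.
cloud = CloudPath(uplink_ms=60.0, server_compute_ms=15.0, downlink_ms=60.0)
print(cloud.total_ms())          # end-to-end cloud latency in ms
print(faster_path(40.0, cloud))  # slower local chip, yet no network cost
```

Under these assumptions the phone wins even though its chip is slower than the server, because the network round trip dominates. With a heavy model and a fast connection the comparison can go the other way.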

Personalized User Experiences

Edge AI also facilitates personalized user experiences by learning and adapting to individual user behaviors. For instance, it can optimize battery usage, enhance camera performance, or recommend content based on the user's preferences. Since these processes occur on the device, they can be tailored to the specific needs and habits of the user, bringing a more satisfactory experience.
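
As a toy illustration of on-device personalization, the sketch below keeps a simple count of what the user interacts with and recommends the most frequent categories. The class name and categories are hypothetical, and production systems use far richer models. What it shows is that the preference data can live and be queried entirely on the device.

```python
from collections import Counter
from typing import List


class LocalPreferenceModel:
    """Tracks interaction counts in local memory; nothing is sent to a server."""

    def __init__(self) -> None:
        self._counts = Counter()

    def record(self, category: str) -> None:
        """Register one interaction with a content category."""
        self._counts[category] += 1

    def top(self, k: int = 3) -> List[str]:
        """Return the k most frequently used categories, most frequent first."""
        return [category for category, _ in self._counts.most_common(k)]


prefs = LocalPreferenceModel()
for category in ["news", "sports", "news", "music", "sports", "news"]:
    prefs.record(category)
print(prefs.top(2))
```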

Applications in Health Monitoring

Edge AI holds huge potential for health monitoring, as it enables continuous, real-time analysis of data such as heart rate, glucose levels, or sleep patterns. This can lead to more accurate and timely health insights, allowing users to take proactive measures based on their health data. By keeping this sensitive data on the device, Edge AI protects privacy and enhances user trust, which encourages the adoption of health-related applications.
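
A minimal sketch of what continuous, on-device analysis might look like for heart rate is shown below. It flags a reading that deviates sharply from the recent rolling average. The class name, window size, and threshold are assumptions made for illustration and have no clinical meaning. Real health features rely on validated models.

```python
from collections import deque
from statistics import mean


class HeartRateMonitor:
    """Flags readings that deviate sharply from a rolling average, all locally."""

    def __init__(self, window: int = 5, max_deviation_bpm: float = 25.0) -> None:
        self._recent = deque(maxlen=window)  # raw samples never leave this object
        self._max_deviation = max_deviation_bpm

    def add_sample(self, bpm: float) -> bool:
        """Store a reading and return True if it looks anomalous.

        No reading is flagged until the window has filled, so the first
        few samples only build up the baseline.
        """
        is_anomaly = (
            len(self._recent) == self._recent.maxlen
            and abs(bpm - mean(self._recent)) > self._max_deviation
        )
        self._recent.append(bpm)
        return is_anomaly


monitor = HeartRateMonitor(window=3, max_deviation_bpm=20.0)
for reading in [70, 72, 71, 120, 73]:
    if monitor.add_sample(reading):
        print(f"Unusual reading: {reading} bpm")
```

Because the comparison happens on the device, only the decision to alert the user would ever need to be surfaced, not the raw stream of readings.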

On-Device Mobile AI: Future Trends

On-device mobile AI is becoming more and more sophisticated, driving innovation in how we interact with our devices and the world around us. As AI chips continue to evolve, we can expect even more powerful and energy-efficient processing that will enable real-time, complex tasks like advanced augmented reality experiences, seamless natural language conversations, and personalized health monitoring—all without relying on the cloud.

In the near future, we are also likely to see better integration of AI across interconnected devices, where smartphones, wearables, and smart home devices all work together to anticipate and respond to user needs with greater accuracy and speed.

This shift towards more decentralized, sophisticated artificial intelligence will pave the way for entirely new categories of AI-driven applications beyond our imagination.

Our experts at Magora will help you harness the power of mobile AI to build an innovative app tailored to your specific needs. We specialize in developing customized solutions and ensure that every aspect of the app is aligned with your users’ preferences and operational goals.

From concept to completion, our mobile app development company will guide you through every phase of development, ensuring your project is on the right track from the very start. We offer comprehensive support in building innovative, high-performance apps that enhance your operational efficiency and deliver superior user experiences.

Let us help you transform your vision into reality. Contact us today to learn more about our bespoke app development services and how we can help you leverage mobile AI capabilities to achieve your business objectives.